Bayesian Data Analysis for dummies like me

Explaining physical phenomena in a way consistent with observations

Bayesian data analysis is a way of iteratively building a mathematical description of a physical phenomenon of interest using observed data.

Setup

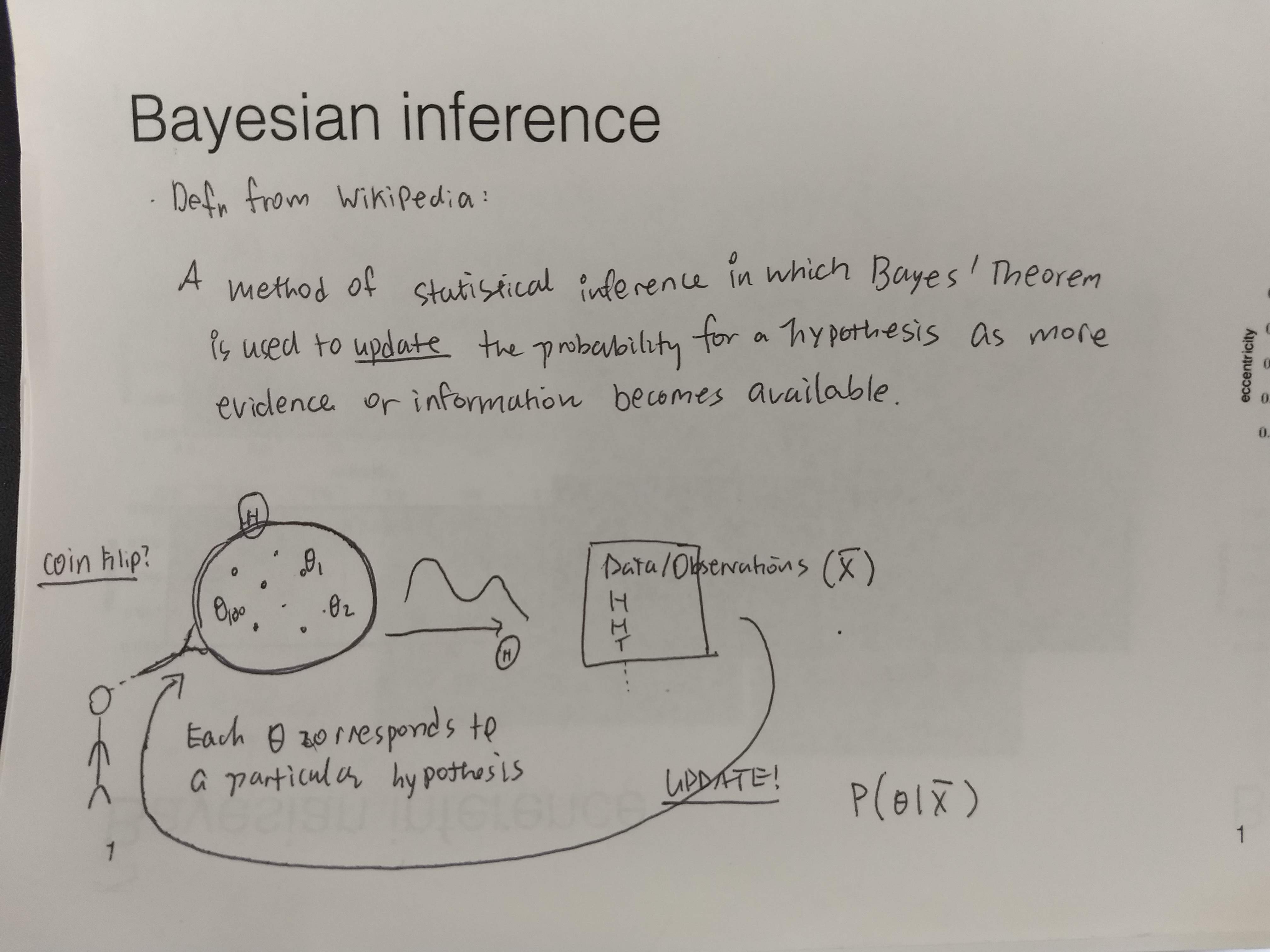

Bayesian inference is a method of statistical inference in which Bayes' Theorem is used to update the probability for a hypothesis (\(\theta\)) as more evidence or information becomes available [wikipedia].

It is used in the following scenario, whose workflow I'll call "Bayesian data analysis", following Gelman.

- We have some physical phenomenon (aka. process) of interest that we want to describe in mathematical language. Why? Because once we have the description (aka. a mathematical model of the physical phenomenon), we can use it to explain how the phenomenon works as a function of its inner components, and to predict how it would behave as the inner components or its input variables take different values (... any other uses of the mathematical model?).

- We decide how to describe the phenomenon in mathematical language by specifying:

  - Variables

  - Relations

  This is the step of "choosing a model family" (aka. a statistical model).

- Now we have specified a family of probability models, each of which corresponds to a particular hypothesis (explanation) of the physical process of interest. What we need to do is choose the "best" hypothesis among all of these possible hypotheses. To do so, we need to observe how the physical phenomenon manifests itself by collecting data on its outcomes.

- Collect data on the outcomes of the phenomenon.

- "Learn"/"fit" the model to the data (aka. "estimate" the parameters \(\theta\) with the data). In English, this corresponds to "finding the hypothesis of the phenomenon that best matches the observed data". To find such a hypothesis \(\theta \in \Theta\), we need to define what it means to be the "best" hypothesis given the model (aka. the hypothesis space) and the observed data. We formulate this step as an optimization problem:

- Choose a loss function \(L(\theta \mid \text{model}, \bar{X})\).

- Solve the optimization problem of finding the argmin of the loss:

$$ \theta^{*} = \arg\min_{\theta} \; L(\theta \mid \text{model}, \bar{X})$$

- Note: \(L(\theta \mid \text{model}, \bar{X}) \equiv L(\theta \mid \Theta, \bar{X})\), because the model is exactly the hypothesis space \(\Theta\). So we can rewrite the optimization objective as:

$$ \theta^{*} = \arg\min_{\theta \in \Theta} \; L(\theta \mid \bar{X})$$
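As a concrete, hypothetical instance of these steps, here is a minimal sketch assuming the phenomenon is a coin flip, the model family is Bernoulli(\(\theta\)) with \(\Theta = (0, 1)\), and the loss is the negative log-likelihood (one common choice, not the only one). The data `x_bar` is made up, and \(\Theta\) is discretized to a grid so the argmin can be found by brute force.

```python
import numpy as np

# Hypothetical observed data X̄: outcomes of 10 coin flips (1 = heads).
x_bar = np.array([1, 0, 1, 1, 0, 1, 1, 1, 0, 1])

def loss(theta, x):
    """Negative log-likelihood of a Bernoulli(theta) model: L(theta | X̄)."""
    return -np.sum(x * np.log(theta) + (1 - x) * np.log(1 - theta))

# Hypothesis space Θ, discretized to a grid strictly inside (0, 1).
thetas = np.linspace(0.001, 0.999, 999)
losses = np.array([loss(t, x_bar) for t in thetas])

# θ* = argmin over Θ of the loss.
theta_star = thetas[np.argmin(losses)]
print(theta_star)
```

With this loss, the minimizer is the fraction of heads in the data (7 out of 10 here), which is the familiar maximum-likelihood estimate.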

More specific scenario: a phenomenon with unobservable variables

Most physical phenomena involve variables that we can't directly observe. These are called "latent variables", and a statistical model with such unobservable variables (in addition to the observed/data variables) is called a "latent variable model". When working with latent variable models, we often use \(Z\) for the latent variables and \(X\) for the data sample variable. That is, if we have \(N\) observations, the sample variable is a vector of \(N\) data variables: \(X = \{X_1, X_2, \dots, X_N\}\). Recall the general Bayesian data analysis workflow above (ie. choose a model \(\rightarrow\) collect data \(\rightarrow\) fit the model to the data \(\rightarrow\) criticize the model \(\rightarrow\) repeat). We can express this workflow in the new notation as follows (this notation is consistent with Blei MLSS2019):

- In English, describe the physical phenomenon of interest.

- Choose a statistical model by specifying:

  - variables (nodes in the graph)

    - data variables (aka. observable variables): \(X\)

    - latent variables: \(Z\)

  - relations (edges in the graph)

    - as a (parametrized) function of its nodes

Let's denote the set of all parameters in the model by \(\theta\). Our statistical model can then be expressed as the joint distribution \(P(Z, X; \theta)\).


- Collect data: \(\bar{X}\)

- Fit the model to the observed data:

  - Choose a loss function (a function of the parameters): \(L(\theta; \bar{X})\) for \(\theta \in \Theta\).
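The workflow above can be sketched end-to-end for a hypothetical latent variable model: a two-component Gaussian mixture, where the latent \(Z\) picks a component and \(X\) given \(Z\) is Gaussian, so the joint \(P(Z, X; \theta)\) factorizes along the single edge \(Z \rightarrow X\). All parameter values and function names are made up for illustration, and the loss is assumed to be the negative log marginal likelihood, \(L(\theta; \bar{X}) = -\sum_{n} \log \sum_{z} P(Z_n = z, X_n = \bar{X}_n; \theta)\), one common choice among several.

```python
import numpy as np

def normal_pdf(x, mu, sigma):
    """Density of Normal(mu, sigma^2) at x (works elementwise on arrays)."""
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2 * np.pi))

def joint(z, x, theta):
    """P(Z = z, X = x; θ) = P(Z = z; θ) * p(X = x | Z = z; θ)."""
    pi, mu, sigma = theta
    return pi[z] * normal_pdf(x, mu[z], sigma[z])

def marginal(x, theta):
    """p(X = x; θ): the latent Z is summed out of the joint."""
    pi = theta[0]
    return sum(joint(z, x, theta) for z in range(len(pi)))

def sample(n, theta, rng):
    """Generate n (Z, X) pairs from the model: draw Z first, then X given Z."""
    pi, mu, sigma = theta
    z = rng.choice(len(pi), size=n, p=pi)
    x = rng.normal(mu[z], sigma[z])
    return z, x

def loss(theta, x_bar):
    """L(θ; X̄): negative log marginal likelihood of i.i.d. observations."""
    return -np.sum(np.log(marginal(x_bar, theta)))

# Hypothetical "true" θ = (mixing weights, means, std. devs.) of the phenomenon.
theta_true = (np.array([0.3, 0.7]), np.array([-2.0, 3.0]), np.array([1.0, 1.5]))
rng = np.random.default_rng(0)
z_hidden, x_bar = sample(500, theta_true, rng)  # in practice only x_bar is observed

# A hypothesis with the component means swapped should fit the data worse.
theta_bad = (np.array([0.3, 0.7]), np.array([3.0, -2.0]), np.array([1.0, 1.5]))
print(loss(theta_true, x_bar), loss(theta_bad, x_bar))
```

This only evaluates the loss for two hypotheses; fitting would mean searching all of \(\Theta\) for the minimizer (eg. with the EM algorithm or gradient-based optimization, not shown here).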

Inference

Generally speaking, inference (which stems from the Philosophy of Science)

Bayesian inference method

Bayesian inference is a method of statistical inference in which Bayes' Theorem is used to update the probability for a hypothesis (\(\theta\)) as more evidence or information becomes available [wikipedia].

- My sketch

It is not a model; it is a general method (aka. technique, algorithm) that lets us infer unknowns probabilistically by computing, eg., marginal and conditional distributions of the model: the distribution over the parameters given the observed data, or the conditional distribution over the latent variables given the observed data.
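To see that this kind of "inference" is just computation on a probability model, here is a minimal sketch with a made-up discrete joint distribution \(P(Z, X)\) (the table values are hypothetical). The marginal is a sum over the unknown, and the conditional is the joint divided by that marginal, which is exactly Bayes' theorem.

```python
import numpy as np

# Hypothetical joint distribution P(Z, X): binary latent Z (rows),
# three-valued observable X (columns). All entries sum to 1.
joint = np.array([
    [0.10, 0.20, 0.10],   # Z = 0
    [0.30, 0.05, 0.25],   # Z = 1
])

# Marginal P(X): sum the joint over the unknown Z.
p_x = joint.sum(axis=0)

# Conditional P(Z | X): joint / marginal, column by column (Bayes' theorem).
p_z_given_x = joint / p_x

x_observed = 1
print(p_x)
print(p_z_given_x[:, x_observed])
```

Observing \(X = 1\) here "flips" the prior belief about \(Z\): the marginal \(P(Z = 0)\) is 0.4, but \(P(Z = 0 \mid X = 1)\) is 0.8.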

Since it is a general technique (or an approach to doing inference) that is not tied to a specific model or problem, we can use it whenever a suitable setup presents itself. In the Bayesian data analysis workflow, I see two places where we can use Bayes' theorem to infer unknown quantities in the model (ie. use Bayesian inference to compute unknowns given knowns):

1. Use the Bayesian inference method to learn the model from the observed data. That is, what is the probability of the parameters of the model given the observations?

   $$ P(\theta \mid \bar{X})$$

2. Use Bayes' theorem to compute the conditional distribution of the latent variables given the observed data and a fixed parameter (eg. the parameter learned in step 1):

   $$ P(Z \mid \bar{X}, \bar{\theta})$$
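A minimal sketch of both uses, under made-up assumptions: (1) a coin-flip model with an assumed uniform prior on \(\theta\), where \(P(\theta \mid \bar{X})\) is computed on a grid over \(\Theta\); (2) a two-component Gaussian mixture with a fixed, hypothetical \(\bar{\theta}\), where \(P(Z \mid \bar{X}, \bar{\theta})\) is computed for each observation. In both cases the computation is the same: joint divided by marginal.

```python
import numpy as np

# --- 1. P(θ | X̄) for a hypothetical coin-flip model, on a grid over Θ ---
x_bar = np.array([1, 0, 1, 1, 0, 1, 1, 1, 0, 1])   # hypothetical observations
thetas = np.linspace(0.001, 0.999, 999)             # grid over Θ = (0, 1)
prior = np.ones_like(thetas)                         # assumed uniform prior
heads = x_bar.sum()
likelihood = thetas ** heads * (1 - thetas) ** (len(x_bar) - heads)
posterior = prior * likelihood
posterior /= posterior.sum()                         # normalize (plays the role of P(X̄))
posterior_mean = np.sum(thetas * posterior)

# --- 2. P(Z | X̄, θ̄) for a hypothetical 2-component Gaussian mixture ---
pi = np.array([0.3, 0.7])           # fixed θ̄: mixing weights P(Z = k)
mu = np.array([-2.0, 3.0])          # fixed θ̄: component means
sigma = np.array([1.0, 1.5])        # fixed θ̄: component std. devs.
x_obs = np.array([-1.5, 0.5, 4.0])  # hypothetical observations

def normal_pdf(x, m, s):
    """Density of Normal(m, s^2) at x (elementwise, broadcasts)."""
    return np.exp(-0.5 * ((x - m) / s) ** 2) / (s * np.sqrt(2 * np.pi))

# joint[n, k] = P(Z_n = k, X_n = x_n; θ̄), via broadcasting over shape (N, K).
joint = pi * normal_pdf(x_obs[:, None], mu, sigma)
# Bayes' theorem: divide each row by its marginal P(X_n = x_n; θ̄).
p_z_given_x = joint / joint.sum(axis=1, keepdims=True)

print(posterior_mean)
print(p_z_given_x)
```

For the coin model the exact posterior is Beta(1 + 7, 1 + 3), so the grid estimate of the posterior mean should land close to 8/12; each row of `p_z_given_x` is a distribution over the two components for one observation.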

Note: I was living in a fog, under the impression that "Bayesian inference" is tied to either 1 or 2. But now I understand that "Bayesian inference" just means computing probability distributions over the unknowns: quantities that are unobservable (ie. the conditional distribution of the latent variables given the observed data), or a subset of variables (ie. a marginal distribution) that requires further computation on the joint distribution (aka. the probability model). So, as Wikipedia's definition clarifies, any time we have a quantity (with a prior distribution) and make observations of a relevant process, we can update the prior distribution using the observed data via Bayes' theorem. That is all there is to the intimidating phrase "Bayesian inference". Gosh, can we please give another name to this way of doing computation with a probability model and data assumed to come from that probability model? "Inference" is such an intimidating word. I feel like I need to do philosophy to use it, and every time I hear the term, I feel like I never understand what the heck it is about, because I don't understand what inference means in philosophy. Yikes! :[
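The "update the prior as observations arrive" idea fits in a few lines when the prior is conjugate. A minimal sketch, assuming a coin-flip (Bernoulli) model with a Beta prior on \(\theta\), a standard conjugate pair for which Bayes' theorem reduces to counting heads and tails; the data and the helper `update` are hypothetical.

```python
# Prior Beta(a, b) on θ; each observation x in {0, 1} updates it via Bayes' theorem.
def update(a, b, x):
    """Posterior Beta parameters after observing one coin flip x (1 = heads)."""
    return a + x, b + (1 - x)

a, b = 1.0, 1.0                          # assumed prior: Beta(1, 1), i.e. uniform
x_bar = [1, 0, 1, 1, 0, 1, 1, 1, 0, 1]   # hypothetical observations, arriving one at a time

for x in x_bar:
    a, b = update(a, b, x)  # today's posterior becomes tomorrow's prior

# Updating on all the data at once gives the same posterior.
batch = (1.0 + sum(x_bar), 1.0 + len(x_bar) - sum(x_bar))
print((a, b), batch)  # → (8.0, 4.0) (8.0, 4.0)
print(a / (a + b))    # posterior mean of θ
```

Whether the evidence arrives one flip at a time or all at once, the resulting posterior is the same.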

Approximate Inference

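When we cannot compute the "flipped" probability ("flipped" using Bayes' theorem) because it is, for example, too computationally expensive (typically because the normalizing constant \(P(\bar{X})\) requires summing or integrating over all possible values of the unknowns), we sometimes resort to an approximation of the true "flipped" probability.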
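The simplest way to see "approximate the flipped distribution" in action is with sampling. Note that this is not the variational approach discussed next; it is a swapped-in technique, self-normalized importance sampling with the prior as the proposal. A minimal sketch, assuming the same hypothetical coin-flip model with a uniform prior: the posterior mean of \(\theta\) is approximated without ever computing \(P(\bar{X})\), because the normalizing constant cancels when the weights are normalized. This toy posterior has a closed form, so the approximation can be checked against it.

```python
import numpy as np

rng = np.random.default_rng(0)
x_bar = np.array([1, 0, 1, 1, 0, 1, 1, 1, 0, 1])  # hypothetical coin flips
heads, n = x_bar.sum(), len(x_bar)

# Draw θ from the (assumed uniform) prior and weight each draw by its likelihood.
theta_samples = rng.uniform(0.0, 1.0, size=100_000)
weights = theta_samples ** heads * (1 - theta_samples) ** (n - heads)
weights /= weights.sum()  # self-normalization: P(X̄) cancels out

approx_mean = np.sum(weights * theta_samples)
exact_mean = (1 + heads) / (2 + n)  # mean of the exact Beta(1 + heads, 1 + tails) posterior
print(approx_mean, exact_mean)
```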

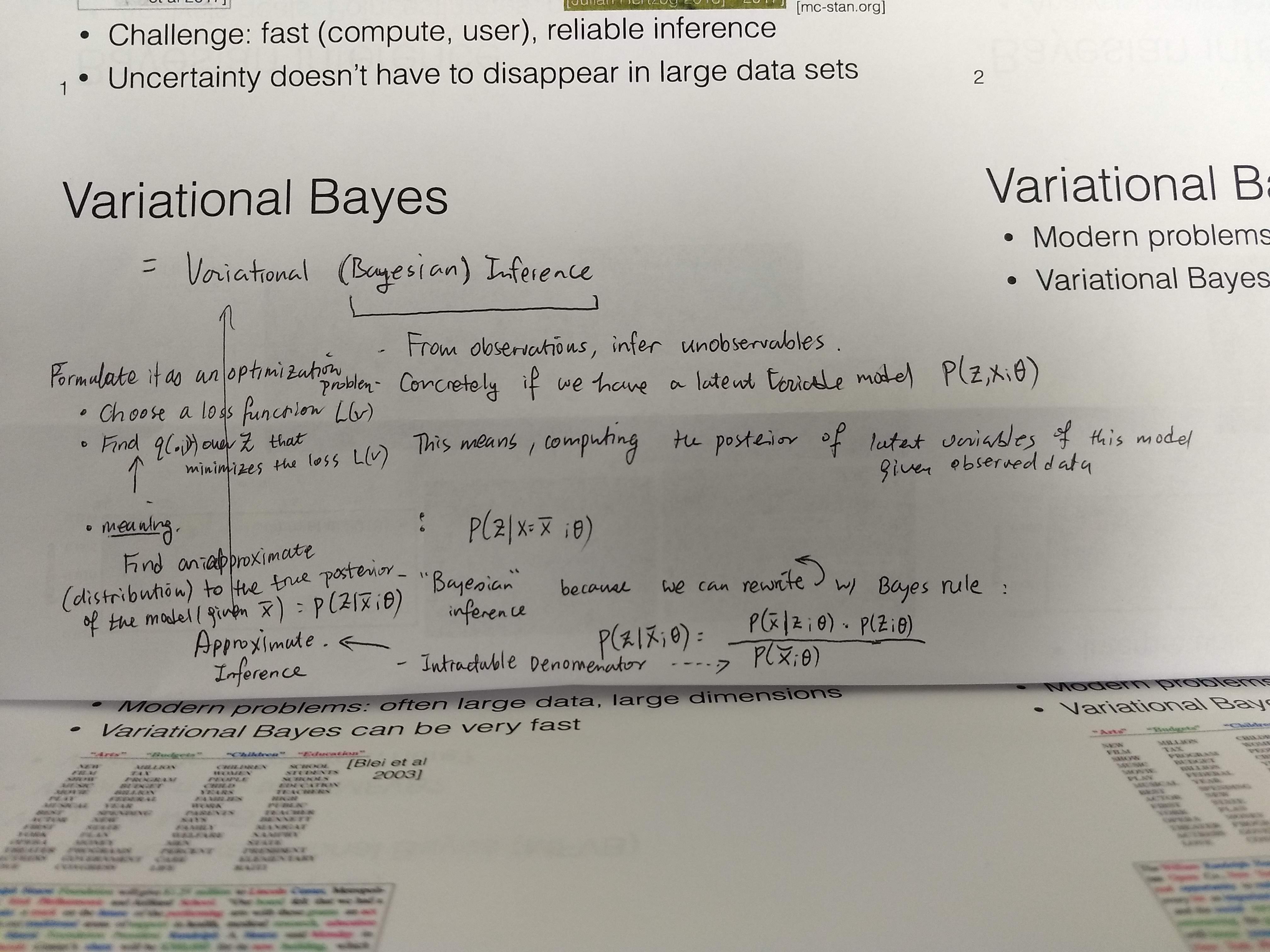

Variational Approximate Inference

People call this "Variational Bayes", which I find very loaded. It is unclear whether the term refers to a method of inference or to some model family: both "V" and "B" are capitalized, which gives me the impression that it's the name of some specific class of probability distributions. Yikes2! :[ Please give it another name.

Variational Approximate Bayesian Inference is:

a method of finding a "good" approximation to the "flipped" distribution (ie. "flipped" using Bayes' theorem) of your probability model \(P\) with a fixed parameter \(\bar{\theta}\), by formulating a proper optimization problem.

So far, we have discussed "Bayesian inference" and the occasional need to be content with an "approximation" to the "flipped" distribution (given a fixed parameter and observed data). The last thing to understand is the "variational" part, which corresponds to formulating the search for a "good" approximating distribution as an optimization problem. As usual for an optimization problem, we need to define "goodness", or in this case, a "loss".
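A minimal sketch of that optimization, with several loudly stated assumptions: the target is the unnormalized posterior of \(\theta\) in a hypothetical coin-flip model (7 heads, 3 tails, uniform prior), the approximating family is Gaussian, \(q(\theta) = \mathcal{N}(m, s^2)\), and the "loss" is the KL divergence \(\mathrm{KL}(q \,\|\, p)\), a common choice in variational inference (its negative, up to a constant, is what is usually called the ELBO). Everything is evaluated on a grid and minimized by brute force, which only works because \(\theta\) is one-dimensional; practical implementations use gradient-based optimization instead.

```python
import numpy as np

# Target: unnormalized posterior of θ for a hypothetical coin-flip model
# with a uniform prior (7 heads, 3 tails), evaluated on a grid over Θ = (0, 1).
thetas = np.linspace(0.001, 0.999, 999)
dtheta = thetas[1] - thetas[0]
heads, tails = 7, 3
log_p_unnorm = heads * np.log(thetas) + tails * np.log(1 - thetas)

def log_q(m, s):
    """Log density of the assumed approximating family Normal(m, s^2),
    renormalized so that it integrates to 1 on the grid."""
    lq = -0.5 * ((thetas - m) / s) ** 2
    return lq - np.log(np.sum(np.exp(lq)) * dtheta)

def loss(m, s):
    """KL(q || p) up to an additive constant: E_q[log q - log p_unnorm].
    The unknown normalizer of p only adds log P(X̄), which does not depend on (m, s)."""
    lq = log_q(m, s)
    return np.sum(np.exp(lq) * (lq - log_p_unnorm)) * dtheta

# Brute-force search over the variational parameters (m, s).
ms = np.linspace(0.30, 0.95, 66)
ss = np.linspace(0.05, 0.30, 26)
best_loss, m_best, s_best = min((loss(m, s), m, s) for m in ms for s in ss)
print(m_best, s_best)
```

Only the unnormalized posterior is needed here: the intractable constant shifts the loss but not its argmin, which is the practical appeal of formulating approximate inference as optimization.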

- Sketch for understanding the motivation for the variational Bayesian inference method (aka. Variational Bayes)

To be continued...