Short note on coarse-graining

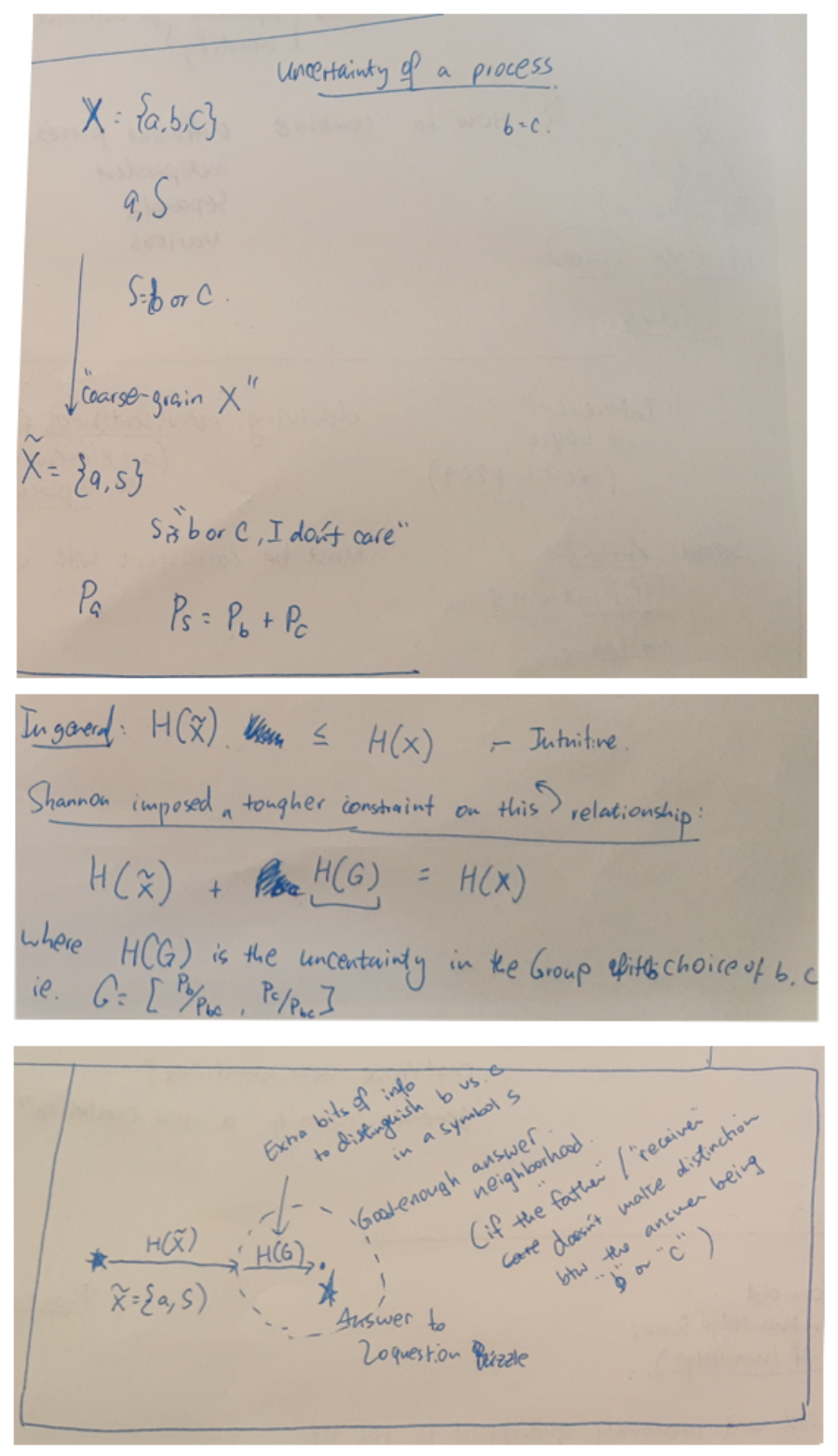

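One of the axioms of Shannon's information theory is that (Shannon's) entropy satisfies the coarse-graining, or grouping, property. Suppose the outcomes of $X$, with probabilities $p_1, \dots, p_n$, are merged into groups $B_1, \dots, B_m$. This gives a coarse-grained variable $\tilde{X}$ with probabilities $q_j = \sum_{i \in B_j} p_i$. Then:

$$H(X) = H(\tilde{X}) + \sum_{j=1}^{m} q_j \, H\!\left(\left\{ p_i / q_j \right\}_{i \in B_j}\right) = H(\tilde{X}) + H(X \mid \tilde{X}).$$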
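As a quick numerical sanity check of the grouping property, here is a minimal Python sketch. The six-outcome distribution and the three groups are made up purely for illustration. The helper names `entropy` and `coarse_grain` are hypothetical and not from any library.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def coarse_grain(p, groups):
    """Merge outcome probabilities p into groups (lists of indices).

    Returns q, the coarse-grained distribution, and for each group the
    within-group conditional distribution p_i / q_j.
    """
    q = [sum(p[i] for i in g) for g in groups]
    conditionals = [
        [p[i] / qj for i in g] if qj > 0 else [0.0 for _ in g]  # empty-mass group adds no entropy
        for g, qj in zip(groups, q)
    ]
    return q, conditionals

# Illustrative (made-up) distribution over six outcomes, grouped into three coarse cells.
p = [0.1, 0.2, 0.05, 0.15, 0.3, 0.2]
groups = [[0, 1], [2, 3], [4, 5]]

q, conditionals = coarse_grain(p, groups)
h_fine = entropy(p)        # H(X)
h_coarse = entropy(q)      # H(X~)
h_within = sum(qj * entropy(c) for qj, c in zip(q, conditionals))  # H(X | X~)

print(f"H(X)      = {h_fine:.4f} bits")
print(f"H(X~)     = {h_coarse:.4f} bits")
print(f"H(X | X~) = {h_within:.4f} bits")
print("grouping holds:", math.isclose(h_fine, h_coarse + h_within))
```

Each within-group weight `p_i / q_j` is a conditional probability. That is why the second term is a conditional entropy.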

This property is closely related to conditional probabilities. Any act of communication involves some "tolerance" bound that allows a "good-enough" intention or meaning to be transmitted by the sender and understood by the receiver. This holds regardless of the agents involved: two people talking over the phone, a parent cell's DNA and its daughter cell's DNA, a disk's contents at time T and at time T+10, or me (the writer of this article) and you (the reader).

How is this idea related to rate-distortion theory or to error-correcting codes? Can it help us understand, or even define, "semantic" information? By contrast, Shannon's information measure is often called "syntactic" because it ignores the identities of the events. It depends only on the probabilities of the events in the process whose uncertainty we are measuring.

Pondering...

- Coarse-graining, i.e., the level of detail at which we describe a process

- As we abstract away from the particular representational form of an event or instance, we move from a "semantics + form" domain → → → to a "semantics + less form" domain. This lets me say "The chair is blue" and have you understand roughly what color the chair is, without my specifying the exact shade.

- At which level of abstraction, i.e., at which rung of this ladder of coarse-graining, do we retain a sufficient level of semantics (good enough to communicate our intentions)?

- If we measure the entropy $H(\tilde{X})$ of the coarse-grained variable $\tilde{X}$ at that level, can we say that this quantity measures "semantic information"?

- The difference $H(G)$ acts as the force (or gradient) that drives the flow of information. But information about what?
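The "ladder of coarse-graining" can be made concrete with a small Python sketch. It uses the chair-color example with an invented distribution over shades and three invented rungs: color name, warm/cool, and simply "colored". It prints $H(\tilde{X})$ at each rung and the entropy lost relative to the finest description, $H(X) - H(\tilde{X})$. When $\tilde{X}$ is a deterministic function of $X$, this loss equals $H(X \mid \tilde{X})$. Reading that gap as the difference in the last bullet is an assumption on my part, not the note's definition of $H(G)$. All names and numbers below are hypothetical.

```python
import math
from collections import defaultdict

def entropy(dist):
    """Shannon entropy (bits) of a dict mapping outcome -> probability."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def push_forward(dist, f):
    """Distribution of f(X) when X ~ dist: probabilities of merged outcomes add up."""
    out = defaultdict(float)
    for x, p in dist.items():
        out[f(x)] += p
    return dict(out)

# Hypothetical fine-grained distribution over shades (numbers made up for illustration).
shades = {
    "navy": 0.15, "sky blue": 0.20, "teal": 0.05,
    "crimson": 0.10, "scarlet": 0.15, "maroon": 0.05,
    "lime": 0.20, "olive": 0.10,
}

# Hypothetical rungs of the ladder: each maps a shade to a coarser label.
color_name = {
    "navy": "blue", "sky blue": "blue", "teal": "blue",
    "crimson": "red", "scarlet": "red", "maroon": "red",
    "lime": "green", "olive": "green",
}
warmth = {"blue": "cool", "green": "cool", "red": "warm"}

ladder = [
    ("shade", lambda s: s),
    ("color name", lambda s: color_name[s]),
    ("warm/cool", lambda s: warmth[color_name[s]]),
    ("colored", lambda s: "colored"),
]

h_fine = entropy(shades)  # H(X) at the finest level
results = []
for level, f in ladder:
    h = entropy(push_forward(shades, f))  # H(X~) at this rung
    results.append((level, h))
    # f is deterministic, so H(X) - H(f(X)) = H(X | f(X)) >= 0.
    print(f"{level:>10}: H = {h:.3f} bits, lost detail = {h_fine - h:.3f} bits")
```

Because every rung is a deterministic function of the one below it, entropy can only stay the same or drop as we climb. A noisy or context-dependent description, closer to real human communication, would not give that guarantee.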